Documentation for the Music Text


1. The FreiDi Edition Concept

The concept "edition" has a specific meaning in the FreiDi project that should not be confused with that of a traditional analog edition, whose primary objective is to establish a scholarly or critical edition of a work. Instead, the project follows the multidimensional model of an edition formulated by Frans Wiering (Digital Critical Editions of Music: A Multidimensional Model, London, 2006 / Ashgate, 2009). In this model, the basis of any such work is a comprehensive collection of data in various domains and the versatile linking of their items. It is important that the items be available not only as images (for example, as facsimiles or as newly set music texts), but also in their logical domain (that is, music and texts as encoded content), and, for music, in the acoustic/performative domain as well. From this basis, the materials can be explored along different paths depending on the user's research interest, including an attempt to establish an "edition", or more accurately editions, since different viewpoints can or must lead to differing results. The project was mainly about demonstrating these possibilities without itself presenting a critical text. That task was left to the parallel analog edition of Freischütz within the WeGA framework, which establishes a single "authoritative" text, whereas FreiDi aimed to promote a more open concept of the text: to document not only the authorized but also the posthumous reception of the text, and to draw attention to changes in the understanding of the text or to specific problems by making the sources easy to compare. The project's efforts therefore focused on structures for correlating data items and on innovative forms of visualization.

2. The Edition Concept

A primary objective of the music edition within the Freischütz Digital (FreiDi) framework was to show the potential of encoding the musical content completely and linking it with further components of the edition. During the project it became clear that encoding requires much more effort in practice than originally expected. Complete encoding of all available sources was therefore abandoned, and the capacity freed up was invested in developing tools that reduce this effort significantly. With sustainability in mind, the project focused on workflows that other projects can adopt to work more efficiently, while the encodings, an equally reusable project result, were restricted in quantity. The opera was nevertheless covered both longitudinally and in cross section: on the one hand, the entire work was captured in one source (the autograph); on the other, individual movements were documented with their varying details in all extant sources [we encoded the autograph completely, together with 3 numbers in all sources]. This selection makes it possible to show all editorially relevant phenomena and their implementation in the digital edition, so the encoding is not restricted in quality. The digital music edition of Weber's Freischütz is based on a comprehensive encoding of the musical sources in MEI. The sources are captured in a limited diplomatic transcription that records writing habits, for instance abbreviations (which are also written out as needed), the orthography of dynamic markings, and the notation of several voices in one staff (separately stemmed notes vs. chords). These encodings are linked with the associated facsimiles horizontally (bar by bar) and vertically (staff by staff), and are interrelated with each other down to the smallest detail.
No source is privileged in this process: later copies and prints are explored as deeply as Weber's autograph.

From this network of data, the development of the work's text can be reconstructed precisely and in every detail, with each variant shown both in the facsimile and in a diplomatically faithful transcription. Users of the edition can freely choose the granularity at which two texts are compared: for example, whether only differences in pitch and duration are taken into account, or whether writing habits are compared as well. Freischütz offers interesting possibilities for such evaluations: since several copies survive from each of two copyists, one copyist's copies can conveniently be compared with one another, which is expected to yield new findings.

As mentioned, unlike conventional edition concepts, the project deliberately refrained from creating an edited text, since the actual aim of this kind of edition is to document the development of the text, well beyond the composer's own supervision.

3. The Core Concept

The encodings of the Freischütz music sources follow the so-called Core concept. It distinguishes between substantial and accidental information, which are treated in different ways. Substantial information includes, for instance, pitch and duration, articulation, or the extent of individual slurs. Accidental information, on the other hand, includes the type of beaming, individual abbreviations in the various sources, or the direction of a slur (above or below the staff). Both categories are captured in MEI, but are stored in different files. Substantial information is kept in a so-called core file, which holds the substantial information of all sources considered. Where the sources differ, this is captured by means of <mei:app> and <mei:rdg>, i.e., the two (or more) alternative readings are encoded locally at the point of variance, each with a reference to the sources that contain it:

```xml
<!-- File core.xml: -->
<app>
  <rdg source="#A #KA1">
    <note pname="c"/>
  </rdg>
  <rdg source="#KA2">
    <note pname="d"/>
  </rdg>
</app>
```

This highly simplified example states that sources A and KA1 have the note "c", whereas source KA2 has a "d".

To restrict the recorded differences between the sources to what is meaningful, only the most important parameters of the music text are captured in the core file. This file therefore allows only a reproduction of the musical content of a source, not a diplomatic transcription. For this reason, there is an additional separate file for each source, describing the predominantly graphical aspects of its music text. To avoid duplicating information, however, these files do not encode the substantial information themselves but reference the corresponding spot in the core file. Using the direction of a note stem as a simple example, this concept can be illustrated as follows:

```xml
<!-- File core.xml: -->
<note xml:id="qwert" pname="c" oct="4" dur="4"/>

<!-- File sourceA.xml: -->
<note xml:id="asdfg" sameas="core.xml#qwert" stem.dir="up"/>

<!-- File sourceKA1.xml: -->
<note xml:id="yxcvb" sameas="core.xml#qwert" stem.dir="down"/>
```

In the core file, the note is captured as a "c" in the fourth octave (i.e., middle C, written c' in German notation) with quarter-note duration. Each source encoding refers explicitly to this note and adds the information that the stem points upwards (source A) or downwards (source KA1). This shows how less significant deviations can be stored in the source encodings without requiring a separate <app> in the core file.

For analytical purposes the information in the core file suffices, but for an (approximate) diplomatic reproduction the two files need to be combined. Among other things, abbreviations are also captured only in the source files: abbreviated bar-repeat signs, colla-parte directives, tremolo signs, and other abbreviation symbols are reproduced there as notated in the source. In addition, each of these abbreviations is given in written-out form as an alternative in the source file:

```xml
<!-- File source.xml: -->
<choice>
  <orig>
    <mRpt/>
  </orig>
  <reg>
    <note sameas="core.xml#…"/>
    <note sameas="core.xml#…"/>
    <note sameas="core.xml#…"/>
    <note sameas="core.xml#…"/>
  </reg>
</choice>
```

The encoding specifies that there is a bar-repeat sign in the source whose resolution is four notes. These four notes are, as before, linked to the core file. In addition, all resolution notes are linked to their models:

```xml
<!-- File core.xml, bar n: -->
<note xml:id="c1" dur="1"/>

<!-- File core.xml, bar n+1: -->
<note xml:id="c2" dur="1"/>

<!-- File source.xml, bar n: -->
<note xml:id="n1" sameas="core.xml#c1"/>

<!-- File source.xml, bar n+1: -->
<choice>
  <orig>
    <mRpt/>
  </orig>
  <reg>
    <note xml:id="n2" corresp="#n1" sameas="core.xml#c2"/>
  </reg>
</choice>
```

Even in such a trivial case, combining abbreviations with the separation of core and source files leads to considerable markup with many cross-references. This complexity, however, results from the encoding requirements themselves and would not change fundamentally with other encoding concepts. The main advantage of the Core model is a fairly clean separation of the "core content" from the graphical manifestations in the individual sources, which makes significant deviations easy to identify. At the same time, features such as the abbreviations used in the individual sources remain available, and their graphical peculiarities can still be compared (by way of the core file).

4. Tools

Various tools have been developed to implement the data model. They fall into two groups: tools with a graphical user interface, which visualize certain information and thus facilitate data processing, and tools without a graphical user interface.

- MusicXML to MEI

- MEI Cleaning

- Edirom Editor

- Include Music in Core

- Include Music in Sources

- Merge Core and Sources

- Split in Pages

- PMD PitchProof

- Add IDs and Tstamps

- Check Durations

- Lyric Proof

- Concatenate Pages

- Shortcuts

- PMD ControlEvents

- AddAccidGes

- Recore

MusicXML to MEI

MusicXML files, exported from Freischütz Finale files created in collaboration with the Carl-Maria-von-Weber-Gesamtausgabe (WeGA), serve as the basis for encoding the score texts; at WeGA, the same files serve as the raw material for editing the edition's text (WeGA's edited texts are later prepared with the program Score). These files essentially follow Weber's autograph, but they write out all abbreviations and already contain initial editorial additions. After export to MusicXML, they are converted from partwise to timewise MusicXML with the XSLT provided by the MusicXML community, and then converted to MEI with the XSLT provided by the MEI community.
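The partwise-to-timewise step only regroups the same content: in partwise MusicXML each <part> contains its <measure> elements, whereas in timewise MusicXML each <measure> contains one <part> per instrument. The Python sketch below illustrates that regrouping with xml.etree.ElementTree. It is not the community XSLT the project used, and it assumes that every part has the same, numbered measures and that measure-level attributes other than @number can be ignored.

```python
import xml.etree.ElementTree as ET


def partwise_to_timewise(partwise: ET.Element) -> ET.Element:
    """Regroup a <score-partwise> tree into <score-timewise> (illustrative sketch).

    Assumes every <measure> carries @number and every <part> carries @id.
    """
    timewise = ET.Element("score-timewise", dict(partwise.attrib))
    parts = partwise.findall("part")
    # Header material (work, identification, part-list, ...) is carried over unchanged.
    for child in partwise:
        if child.tag != "part":
            timewise.append(child)
    # Measure numbers in order of first appearance across all parts.
    numbers = []
    for part in parts:
        for measure in part.findall("measure"):
            if measure.get("number") not in numbers:
                numbers.append(measure.get("number"))
    # One <measure> per number, containing one <part> per instrument.
    for number in numbers:
        t_measure = ET.SubElement(timewise, "measure", number=number)
        for part in parts:
            p_measure = part.find(f"measure[@number='{number}']")
            if p_measure is not None:
                t_part = ET.SubElement(t_measure, "part", id=part.get("id"))
                t_part.extend(list(p_measure))  # notes, attributes, directions, ...
    return timewise
```

In practice the published stylesheet should be preferred; the sketch only shows that the conversion changes the nesting, not the musical content.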

MEI Cleaning

The generic conversion from MusicXML to MEI leaves some unwanted artifacts in the data. For example, the data contain graphical information on the position of individual signs, but this describes their position in the Finale file, not in the autograph. Since the project does not need this information, an XSLT (improveMusic.xsl) was developed that first cleans the MEI files and discards all graphical information. It also detects, among other things, the incorrect encoding of tremolos (which Finale captures purely graphically, i.e., as notes with strokes through the stem) and replaces it with the functionally correct encoding using <mei:bTrem> or <mei:fTrem>. Furthermore, certain structures are standardized and stable xml:ids are assigned to the bars.
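The two cleaning operations can be sketched in Python as follows. The set of layout attributes to strip (@ho, @vo, @x, @y and the start/end offsets) and the assumption that the generic conversion records a stroked stem as an @stem.mod value such as "2slash" are illustrative; improveMusic.xsl may work differently, and fingered tremolos (<mei:fTrem>), which span two notes, are left out.

```python
import xml.etree.ElementTree as ET

# Illustrative set of layout attributes; improveMusic.xsl may strip a different set.
GRAPHICAL_ATTRS = {"ho", "vo", "x", "y", "startho", "endho", "startvo", "endvo"}


def clean_mei(root: ET.Element) -> ET.Element:
    """Strip layout attributes and wrap slashed-stem notes in <bTrem> (sketch)."""
    # 1. Positions refer to the Finale layout, not to the autograph: drop them.
    for el in root.iter():
        for attr in GRAPHICAL_ATTRS & set(el.attrib):
            el.attrib.pop(attr)
    # 2. Assumed convention: a stroked stem arrives as @stem.mod="1slash", "2slash", ...
    for parent in list(root.iter()):
        if parent.tag == "bTrem":
            continue  # already encoded functionally
        for index, child in enumerate(list(parent)):
            if child.tag == "note" and child.get("stem.mod", "").endswith("slash"):
                trem = ET.Element("bTrem")
                parent.remove(child)
                trem.append(child)
                parent.insert(index, trem)  # same position, now wrapped
    return root
```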

Edirom Editor

Edirom Editor is used, first of all, to prepare the individual Freischütz sources in the usual manner (at the bar level on the facsimiles) and to export them for Edirom Online. These files are then processed with a separate XSLT (improveEditorExport.xsl), which, among other things, assigns stable xml:ids to the bars and adds backlinks from <mei:zone> to <mei:measure>. At the same time, the XSLT creates a temporary file for monitoring the bar counts of the different sources; where necessary, two further XSLTs (recountMeasures.xsl and moveCoreRefs.xsl) are then used to establish a concordance of the sources at the bar level. This concordance rests solely on identical bar numbers.
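Monitoring bar counts amounts to comparing, per source, the bar numbers read from <mei:measure>/@n. A minimal Python sketch, assuming the numbers have already been extracted as integers (real sources may also contain values such as "12a"), which lists the bars a source lacks relative to the other sources or contains twice; the function name is hypothetical:

```python
from collections import Counter


def bar_count_report(sources: dict[str, list[int]]) -> dict[str, dict[str, list[int]]]:
    """Per source, list bar numbers missing (relative to all sources) or repeated."""
    all_numbers = set().union(*sources.values())
    report = {}
    for siglum, numbers in sources.items():
        missing = sorted(all_numbers - set(numbers))
        repeated = sorted(n for n, count in Counter(numbers).items() if count > 1)
        if missing or repeated:  # sources already in concordance are not reported
            report[siglum] = {"missing": missing, "repeated": repeated}
    return report
```

A non-empty report marks the sources whose bar numbering would need adjusting before the concordance can rely on identical numbers.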

Include Music in Core

This step brings together the two previously separate data sets: the Finale-based encoding of the music text, and the bar-level description of the manuscripts made with Edirom, which contains no musical content. Note that the music encodings exist only movement by movement, whereas Edirom Editor produces an encoding of the complete source. Accordingly, the XSLT used in this step (includeMusic2core.xsl) creates a Core file describing only the Freischütz movement just prepared [i.e. one movement]; the following work steps are therefore temporarily movement-based.

The XSLT uses the bar xml:ids normalized in the previous two steps to synchronize the music-text encodings with the files from Edirom Editor and to set up a new encoding. In the process, the accidental information is removed and a Core file is set up (see the Data Model). This Core file contains no links to the facsimiles of the sources. At the same time, a "blueprint" is set up, i.e., a temporary file used as a model for encoding the individual sources. This blueprint contains the accidental information discarded from the Core and establishes the links between source encoding and Core file described in the data model.
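How the information is partitioned can be illustrated on a single note. The Python sketch below moves attributes assumed to be substantial into a Core note and everything else into a blueprint note that points to it via @sameas, reproducing the stem-direction example from the Core concept section. The attribute list and the function name split_note are hypothetical; the actual partition is defined by includeMusic2core.xsl.

```python
import xml.etree.ElementTree as ET

XML_ID = "{http://www.w3.org/XML/1998/namespace}id"
# Assumed split; the project's list of substantial attributes may differ.
SUBSTANTIAL = {"pname", "oct", "dur", "dots", "accid", "artic"}


def split_note(note: ET.Element, core_id: str, core_file: str = "core.xml"):
    """Return (core note, blueprint note) for one encoded note (sketch)."""
    core = ET.Element("note", {XML_ID: core_id})
    blueprint = ET.Element("note", {"sameas": f"{core_file}#{core_id}"})
    for name, value in note.attrib.items():
        if name == XML_ID:
            blueprint.set(XML_ID, value)  # the source note keeps its own id
        elif name in SUBSTANTIAL:
            core.set(name, value)         # substantial -> Core file
        else:
            blueprint.set(name, value)    # accidental -> source file
    return core, blueprint
```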

Include Music in Sources

Here the "blueprints" created in the previous step are actually merged with the files from Edirom Editor, combining facsimile references, references to the Core file, and local encoding of accidental information. The Core concept described in the Data Model is thus implemented for the first time at this point. The XSLT used for this (includeMusic2source.xsl) also creates facsimile references for each <mei:staff> element, i.e., it calculates for each staff of the score, based on the scoring and the dimensions of the whole bar, in which section of the facsimile that staff is found. This calculation rests on general assumptions and, where the writing is very irregular, does not always find the ideal excerpt. In the vast majority of cases, however, it has proved very successful, and given its sufficient quality it is preferable to capturing these excerpts manually, which would be extremely time-consuming.
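The per-staff facsimile references rest on a simple geometric assumption. One plausible version, sketched here in Python, divides the bar's zone (given by the MEI zone coordinates @ulx, @uly, @lrx, @lry) into equal horizontal strips, one per staff. The actual stylesheet also takes the scoring into account, so equal spacing and the names Zone and staff_zones are assumptions of this sketch.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    ulx: int  # upper-left x
    uly: int  # upper-left y
    lrx: int  # lower-right x
    lry: int  # lower-right y


def staff_zones(measure_zone: Zone, staff_count: int) -> list[Zone]:
    """Divide a bar's facsimile zone into equal strips, top to bottom (assumed layout)."""
    height = measure_zone.lry - measure_zone.uly
    zones = []
    for i in range(staff_count):
        top = measure_zone.uly + round(i * height / staff_count)
        bottom = measure_zone.uly + round((i + 1) * height / staff_count)
        zones.append(Zone(measure_zone.ulx, top, measure_zone.lrx, bottom))
    return zones
```

Weighting the strips by staff (for instance, giving braced keyboard staves or vocal staves with text more room) would be a natural refinement where the writing is irregular.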

Merge Core and Sources

Once the data exist in the Core model, they are checked against the sources. As mentioned, the basic encodings roughly correspond to the autograph, but with all abbreviations written out. In a first proofreading step, the data must therefore not only be adapted to the respective source; all "imperfections" of the source must also be restored.

The project's first attempt, which implemented these corrections through a graphical user interface that still worked directly on the Core model, proved impractical. Because source encoding and Core file must be kept in view at all times, the complexity of this step rises to a point where careless errors become unavoidable beyond any tolerable level. For this reason, a different approach was taken to check the data: the structure just established is dissolved again, and the substantial information of the Core file is copied into the source files with an XSLT (mergeCoreSource.xsl). Each source file is now meaningful and complete in itself. However, the @sameas references of the individual elements to the Core are retained, so that a return to the Core remains possible at all times. [NB: We do not, in fact, need these references anymore, but at that point in time this was not yet foreseen in the project.]
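The merge reverses the separation: each source element that points into the Core receives the Core's substantial attributes, while its @sameas link is kept. A Python sketch of that merge, assuming a single @sameas target per element and that attributes already present in the source take precedence; the function name is hypothetical and this is not mergeCoreSource.xsl itself.

```python
import xml.etree.ElementTree as ET

XML_ID = "{http://www.w3.org/XML/1998/namespace}id"


def merge_core_into_source(core: ET.Element, source: ET.Element) -> ET.Element:
    """Copy Core attributes into source elements that reference them (sketch)."""
    by_id = {el.get(XML_ID): el for el in core.iter() if el.get(XML_ID)}
    for el in source.iter():
        ref = el.get("sameas")
        if not ref:
            continue
        target = by_id.get(ref.split("#", 1)[-1])  # "core.xml#qwert" -> "qwert"
        if target is None:
            continue
        for name, value in target.attrib.items():
            if name != XML_ID and name not in el.attrib:
                el.set(name, value)  # @sameas itself stays in place
    return source
```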

Split in Pages

To facilitate proofreading, the movement-based encodings of the individual sources are now split into separate files, one per manuscript or printed page. This has technical reasons and makes loading and saving the data easier in the following steps. The XSLT used for this purpose (generateSystemFiles.xsl) automatically generates, for each page, a <mei:scoreDef> describing the current state of the score, in which all clef changes, changed key signatures, and currently valid transpositions are recorded.
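Computing the score state at each page start means replaying all clef, key, and transposition changes up to that page. In the Python sketch below, the movement is assumed to have been flattened into a document-order list of hypothetical event tuples; generateSystemFiles.xsl works on the MEI directly and writes the result as <mei:scoreDef>.

```python
from copy import deepcopy


def states_at_page_breaks(events):
    """Replay clef/key/transposition changes and record the state at each page break.

    `events` is a hypothetical document-order list of tuples:
    ("clef", staff, clef), ("key", sig), ("trans", staff, semitones), ("pb", page).
    """
    state = {"clefs": {}, "key": None, "trans": {}}
    pages = {}
    for kind, *args in events:
        if kind == "clef":
            staff, clef = args
            state["clefs"][staff] = clef
        elif kind == "key":
            state["key"] = args[0]
        elif kind == "trans":
            staff, semitones = args
            state["trans"][staff] = semitones
        elif kind == "pb":
            # Snapshot: this is what the page's scoreDef would have to record.
            pages[args[0]] = deepcopy(state)
    return pages
```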

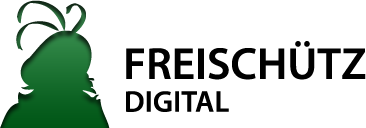

PMD PitchProof

For this step, a web-based tool was designed and implemented, mainly for proofreading pitches and durations (see the PitchTool described below).

At the end of this work step the encodings correspond to the respective sources with respect to notes, rests, and “blank spots.”

Add IDs and Tstamps

Since new elements were created by hand in the previous step, they do not always have a (sufficiently unique) @xml:id. In this step, the proofread data are therefore checked by an XSLT (addIDs_and_tstamps.xsl) and supplemented with @xml:ids where necessary. At the same time, the corresponding @tstamp values (= beats) are calculated automatically and added to all events in MEI (i.e., all notes, chords, rests, etc.). These beats are needed, on the one hand, to proofread all control events (primarily slurs and dynamic markings); on the other, they allow the existing data to be checked for plausibility (see next step).
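The @tstamp values follow from simple arithmetic: an event with @dur d and k dots lasts (1/d)(2 - 1/2^k) of a whole note, and its beat is 1 plus the preceding duration multiplied by the meter unit. A Python sketch for one layer of one bar, assuming plain numeric @dur values and ignoring tuplets and grace notes; the function names are hypothetical.

```python
from fractions import Fraction


def whole_note_fraction(dur: int, dots: int = 0) -> Fraction:
    """Length as a fraction of a whole note: (1/dur) * (2 - 1/2**dots)."""
    return Fraction(1, dur) * (2 - Fraction(1, 2 ** dots))


def tstamps(events: list[tuple[int, int]], meter_unit: int) -> list[Fraction]:
    """1-based @tstamp for each (dur, dots) event of one layer in one bar."""
    result = []
    elapsed = Fraction(0)  # measured in whole notes
    for dur, dots in events:
        result.append(1 + elapsed * meter_unit)
        elapsed += whole_note_fraction(dur, dots)
    return result
```

For example, in 3/4 a dotted quarter, an eighth, and a quarter (tstamps([(4, 1), (8, 0), (4, 0)], 4)) fall on beats 1, 5/2, and 3. Exact fractions avoid rounding problems for dotted values.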

Check Durations

At this point in the workflow, the plausibility of the data is checked with a Schematron file (sanityCheck_durations.sch). For this purpose, the previously computed beats are compared with the current time signature in order to identify rhythmically "incomplete" or "overfull" bars. Most of these are careless errors made during proofreading; a smaller number are actual "errors" in the sources. In both cases, the necessary adjustments to the encodings are made manually in Oxygen, which requires a detailed understanding of MEI and a high degree of discipline.

While faulty data are silently corrected to match the actual source, "errors" in the sources (such as superfluous dots or missing rests) are marked as such and resolved editorially. For this purpose, the MEI elements <sic> and <corr> are used, combined in a <choice>. This step thus introduces the first explicitly editorial interventions into the encodings.
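The check itself is written in Schematron; the Python below only illustrates the arithmetic it relies on, comparing the summed durations of one layer with meter.count/meter.unit. The function names are hypothetical, and upbeat bars, which are legitimately incomplete, would need special treatment.

```python
from fractions import Fraction


def whole_note_fraction(dur: int, dots: int = 0) -> Fraction:
    """Length as a fraction of a whole note: (1/dur) * (2 - 1/2**dots)."""
    return Fraction(1, dur) * (2 - Fraction(1, 2 ** dots))


def check_bar(layer: list[tuple[int, int]], meter_count: int, meter_unit: int) -> str:
    """Classify one layer of a bar as 'ok', 'incomplete' or 'overfull'."""
    expected = Fraction(meter_count, meter_unit)
    actual = sum((whole_note_fraction(d, k) for d, k in layer), Fraction(0))
    if actual < expected:
        return "incomplete"
    if actual > expected:
        return "overfull"  # e.g. a superfluous dot in the source
    return "ok"
```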

Lyric Proof

In a further manual step, the encoding of the text underlay is checked and corrected. For this purpose, a simple XSLT (prepareLyricsProof.xsl) generates an HTML file that displays only the text underlay, aligned with the score by auxiliary signs (including bar lines and syllable separators). All necessary changes are made directly in the encodings with Oxygen.
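The proof view can be pictured as one line of text per voice. A Python sketch, assuming syllables have already been collected per bar together with MEI-style @wordpos values ("i" initial, "m" medial, "t" terminal); bar lines are rendered as "|" and syllable separators as "-". The function name and output markup are hypothetical.

```python
import html
from typing import Optional


def lyrics_proof_line(bars: list[list[tuple[str, Optional[str]]]]) -> str:
    """Render one voice's syllables as an HTML paragraph for proofreading.

    '|' marks bar lines; '-' follows initial and medial syllables.
    """
    rendered_bars = []
    for bar in bars:
        syllables = [text + ("-" if wordpos in ("i", "m") else "") for text, wordpos in bar]
        rendered_bars.append(" ".join(syllables))
    return "<p>" + html.escape(" | ".join(rendered_bars)) + "</p>"
```

For example, [[("Durch", None), ("die", None)], [("Wäl", "i"), ("der", "t")]] yields <p>Durch die | Wäl- der</p>, which can be read against the facsimile bar by bar.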

Concatenate Pages

Before the next step, the division into page-based files must be reversed, returning to an encoding by movement. For this purpose, the individual files are merged with an XSLT (concatenateSystems.xsl). On this occasion, <mei:pb/> elements are added to the music encoding, marking the start of each new page and thus facilitating a page-based evaluation of the data.

Shortcuts

The second major part of proofreading the data is recording and writing out abbreviations. For this purpose, a separate list of <cpMark> elements is created, describing all abbreviations in the sources. This element is a proposed addition to MEI that has not yet been included in the standard but has already been adopted by other projects such as Beethovens Werkstatt. <cpMark> stands both for copy mark and for colla parte mark, since both phenomena can be captured with this element, which is defined as a control event. A simple bar repeat is thus described as follows:

```xml
<cpMark staff="5" tstamp="1" tstamp2="0m+4" ref.offset="-1m+1" ref.offset2="0m+4">//</cpMark>
```

The @ref.offset attribute specifies where the section to be copied begins, relative to the <cpMark> itself: here in the previous bar ("-1m"), on beat 1 ("+1"). The length of the section to be copied is given by the @ref.offset2 attribute, whose syntax is the same as that of @tstamp2 and which is counted from the beginning of the copied section as specified by @ref.offset.
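Resolving such a bar repeat requires turning the two offsets into an absolute passage. A Python sketch of that computation, following the semantics described above (@ref.offset counted from the <cpMark>'s own bar, @ref.offset2 from the start of the copied passage); parse_offset and copied_span are hypothetical names, not part of resolveShortCuts.xsl.

```python
import re

# "<bars>m+<beat>", e.g. "-1m+1" or "0m+2.5"
OFFSET = re.compile(r"^([+-]?\d+)m\+(\d+(?:\.\d+)?)$")


def parse_offset(value: str) -> tuple[int, float]:
    """Split an offset such as '-1m+1' into (bar offset, beat)."""
    match = OFFSET.match(value)
    if not match:
        raise ValueError(f"not a measure+beat offset: {value!r}")
    return int(match.group(1)), float(match.group(2))


def copied_span(bar: int, ref_offset: str, ref_offset2: str):
    """Return ((start bar, start beat), (end bar, end beat)) of the passage to copy."""
    bars1, beat1 = parse_offset(ref_offset)   # relative to the cpMark's bar
    bars2, beat2 = parse_offset(ref_offset2)  # relative to the copied passage's start
    start_bar = bar + bars1
    return (start_bar, beat1), (start_bar + bars2, beat2)
```

For a <cpMark> in bar 10 with the attributes shown above, copied_span(10, "-1m+1", "0m+4") gives ((9, 1.0), (9, 4.0)), i.e. beats 1 to 4 of bar 9.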

Colla-parte directives are specified with the same element, but with other attributes:

```xml
<cpMark staff="5" tstamp="1" tstamp2="0m+4" ref.staff="12" dis="8" dis.place="above">c.B.in 8va</cpMark>
```

This encoding states that from beat 1 to beat 4, staff 5 (staff/@n="5") is to be taken from staff 12 (staff/@n="12"), transposed an octave higher. The abbreviation as written in the source is given as the content of the <cpMark> element.

The <cpMark> elements are compiled in a list and point to their respective bar by means of an additional temporary attribute. This list is compiled by hand, solely with the aid of the facsimiles, and is thus initially separate from the actual music encodings.

Once the <cpMark> elements have been created, an XSLT (resolveShortCuts.xsl) first inserts them into the actual encodings in an internal preparatory step and then resolves them. The abbreviations are written out in several steps, comprising:

- Writing out tremolo directives (<mei:bTrem> and <mei:fTrem>)

- Writing out bar repeat signs

- Writing out other colla-parte directives and repeat signs.

To write out these abbreviations, the original finding (often simply a blank space in the manuscript, i.e., a <mei:space>) is wrapped in a <mei:orig>, and the notes to be adopted are added in a <mei:reg>. Both are juxtaposed in a <mei:choice>, so that the source as written can be compared with the completed, performable score. Each note generated for the <mei:reg> receives a reference to its model, so that the origin of every note in the score can be traced. Multi-level abbreviations are also written out correctly (for example, a multi-bar colla-parte directive in which the copied part itself contains bar repeats).
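The wrapping of a blank passage into <mei:choice> can be sketched in Python with xml.etree.ElementTree. The sketch copies the model notes into the <mei:reg>, points each copy back to its model via @corresp (as in the bar-repeat example of the Core concept section), drops the model's own @xml:id and @sameas, and applies an octave shift for @dis/@dis.place. The function name and these details are assumptions, not the behaviour of resolveShortCuts.xsl.

```python
import xml.etree.ElementTree as ET

XML_ID = "{http://www.w3.org/XML/1998/namespace}id"
OCTAVES = {0: 0, 8: 1, 15: 2, 22: 3}  # @dis value -> octaves of displacement


def resolve_colla_parte(space: ET.Element, models: list[ET.Element],
                        dis: int = 0, dis_place: str = "above") -> ET.Element:
    """Wrap the blank <space> in <orig> and put written-out copies in <reg> (sketch)."""
    choice = ET.Element("choice")
    ET.SubElement(choice, "orig").append(space)
    reg = ET.SubElement(choice, "reg")
    shift = OCTAVES[dis] if dis_place == "above" else -OCTAVES[dis]
    for model in models:
        # Copy the musical attributes, but not the model's identity or its Core link.
        attrs = {k: v for k, v in model.attrib.items() if k not in (XML_ID, "sameas")}
        note = ET.SubElement(reg, "note", attrs)
        note.set("corresp", "#" + model.get(XML_ID))  # trace the note to its model
        if "oct" in attrs:
            note.set("oct", str(int(attrs["oct"]) + shift))
    return choice
```

The caller would then replace the original <space> in its layer with the returned <choice>; the new notes still need their own @xml:id and Core references.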

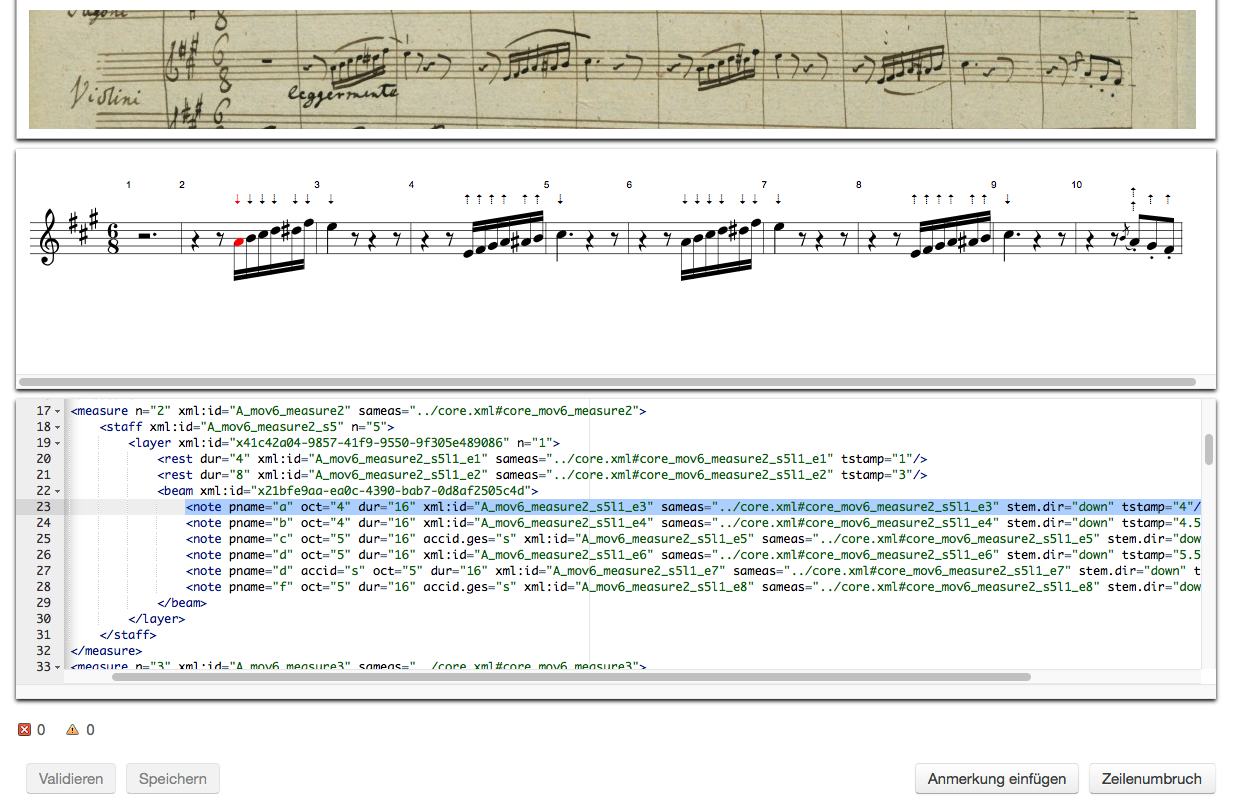

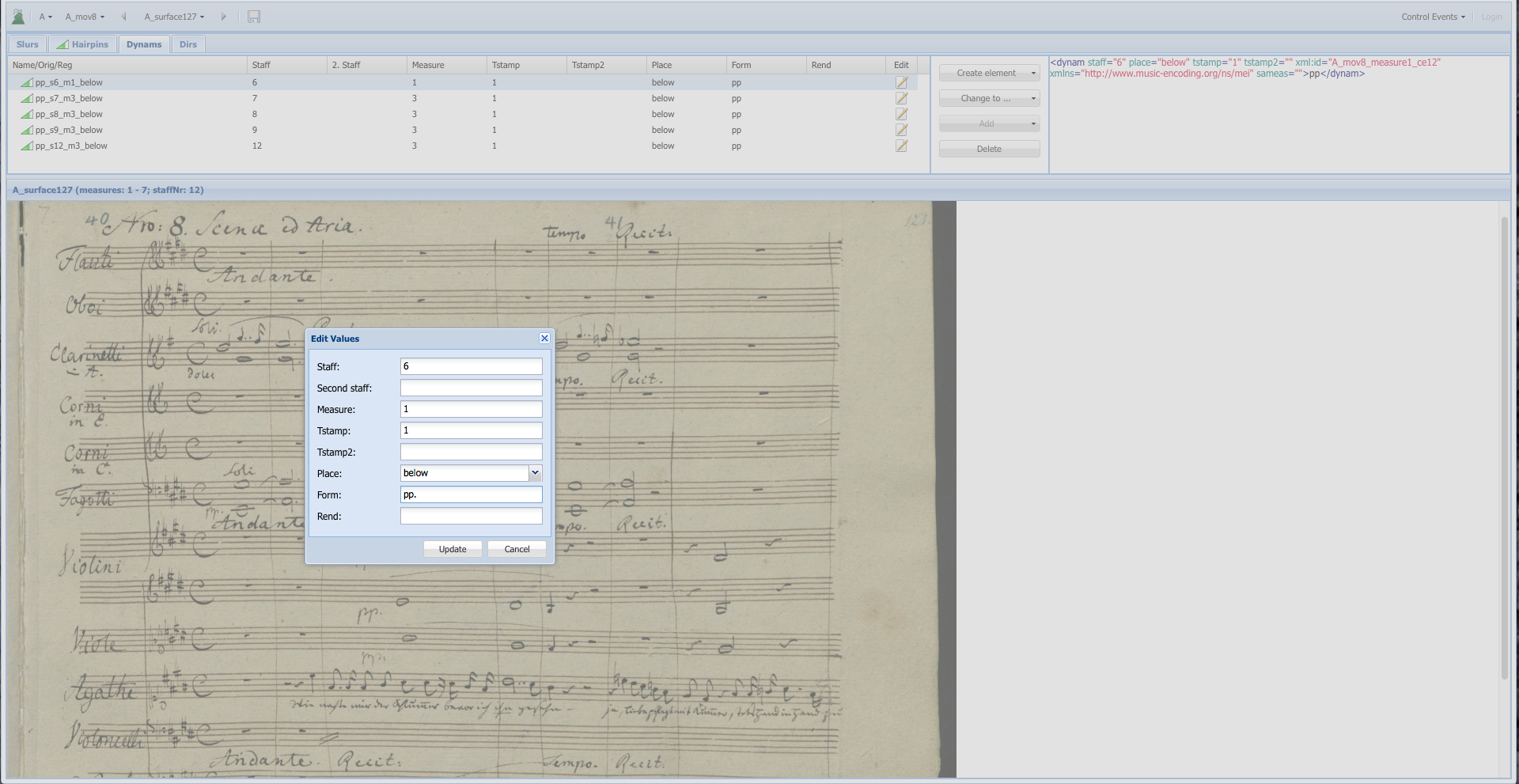

PMD ControlEvents

Here, the control events taken into account in Freischütz Digital are proofread using four separate tools: slurs (<mei:slur>), (de)crescendo hairpins (<mei:hairpin>), dynamic markings (<mei:dynam>), and performance directions (<mei:dir>).

The proofreading tools are designed to ensure that the encoding matches the respective source exactly; they comprise the PitchTool and the ControleventTool.

PitchTool

The PitchTool arranges each staff in three parts: the excerpt from the respective source is compared with the rendering of the encoding, and changes are entered directly in the code section. Besides adding and deleting <note>, <rest>, <clef>, <space>, and <mRpt> elements (including their full-bar and half-bar variants) as well as <beam>, <tuplet>, <bTrem>, and <fTrem> elements, the attributes @pname, @oct, @dur, @dots, @grace, @accid, @artic, @tie, and @stem.dir are proofread here. In the present example, the arrows above the notes show the encoded @stem.dir.

ControleventTool

In the ControleventTool, correction is performed page by page to facilitate context-dependent decisions. It covers slurs, hairpins, dynamic markings, and performance directions. Control events affected by abbreviations or colla-parte bars are treated as if the relevant passages were written out. (They can later be assigned to the colla parte by means of the associated <expan>.)

Slurs

The encoded <slur> elements of a page are listed. A selected <slur> is highlighted graphically in the source and, using the rendering, checked for correct start and end points and correct @curvedir. For an unambiguous reading, start and end are defined via the slur's @startid and @endid, which are set automatically by clicking on a note in the rendering (highlighted in green). The @curvedir is corrected via a dropdown menu. Missing <slur> elements are added and obsolete ones deleted. Where the reading is ambiguous, two start or end points can be selected; an <orig> element is then created automatically for the slur, together with one <reg> element per reading. In this case, the <orig> additionally receives @tstamp and @tstamp2, capturing the slur's extent without reference to an event.

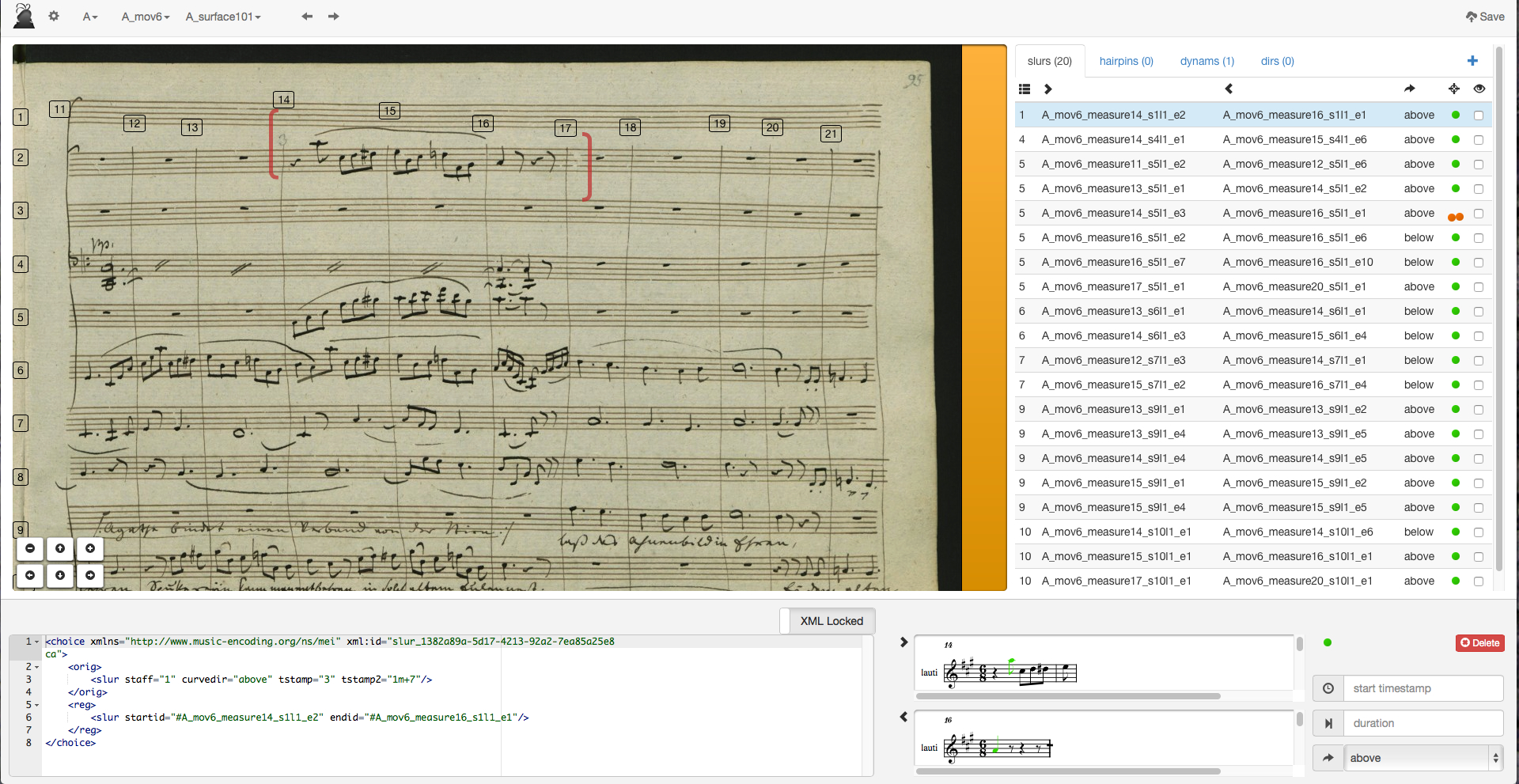

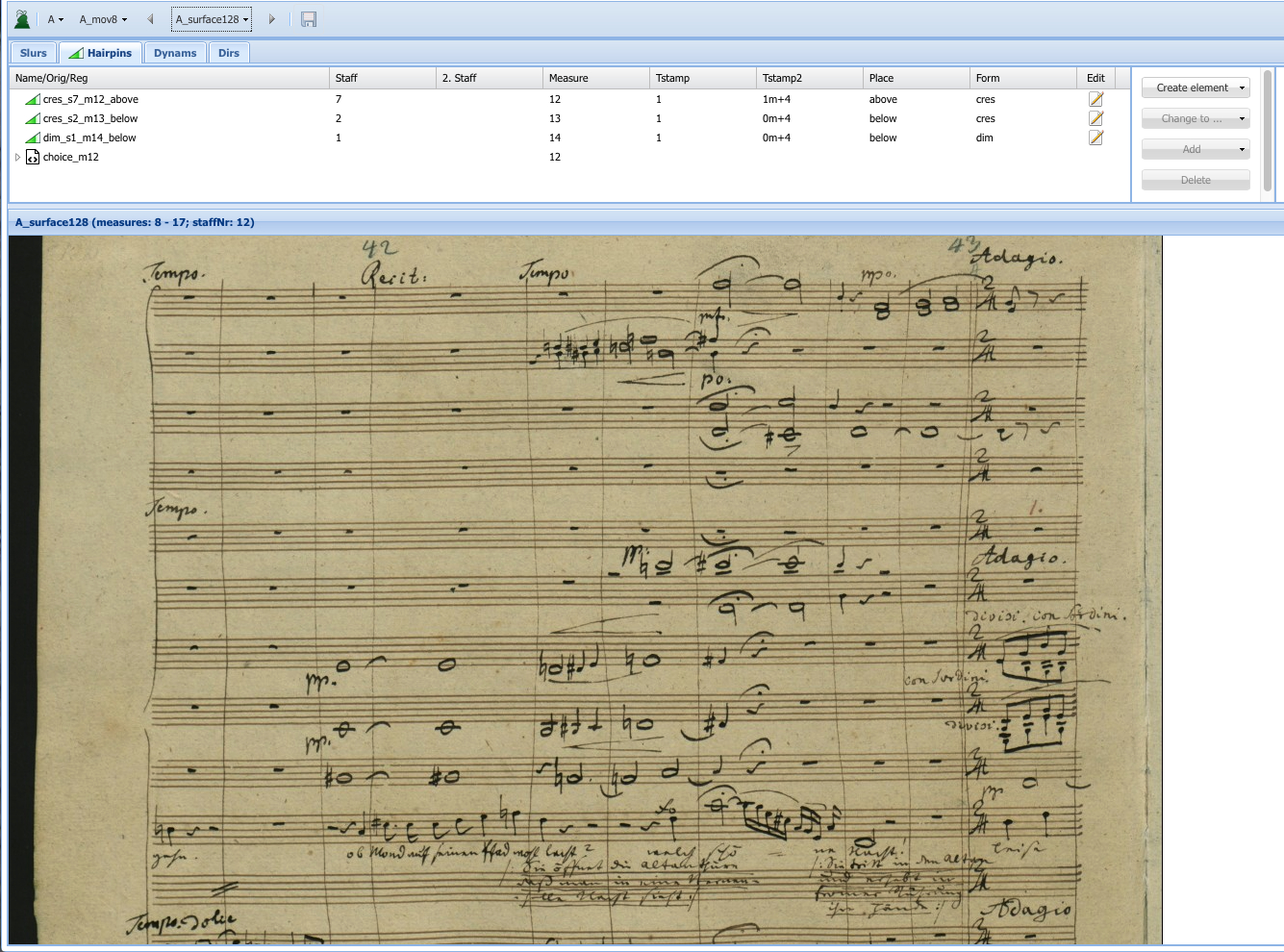

Hairpins

As with slurs, all hairpins of a page are listed here and can be compared with the source, supplemented, or deleted. In the create or edit menu, a hairpin's @tstamp and @tstamp2 as well as @form, @staff, and @place are set or corrected. Again, where individual attributes are ambiguous, an <orig> plus <reg> can be created for the hairpin via "change to".

Dynams & Directions

The <dynam> and <dir> elements are also listed and compared page by page. They are checked in the same way as hairpins; in addition, the text itself must be entered or corrected (using the <rend> element for underlined text where necessary).

It is important that control events resulting from abbreviations are also added in this step, since they frequently cross the boundary between notated and generated music text and cannot be generated reliably by automatic means.

At the end of this step, complete MEI encodings of the sources are available that largely reproduce them diplomatically, also provide written-out alternatives for abbreviations and other gaps in the music text, and include a first editorial treatment of the respective sources.

AddAccidGes

Before the data are merged automatically, the sounding pitch of each note must be added. For this purpose, a separate XSLT (addAccid.ges.xsl) creates an @pnum attribute for each note, which takes into account not only the written pitch but also changes of key signature, local accidentals, transpositions, and octave displacements.
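The sounding pitch can be expressed as a MIDI-style number, with middle C (pname "c", oct 4) = 60. A Python sketch, assuming the accidental from the key signature and any local accidental have already been looked up for the note (a local accidental overrides the key signature), @trans.semi is taken from the staff definition as for transposing instruments, and octave lines are given as a whole-octave shift. The function name and parameters are hypothetical.

```python
PITCH_CLASS = {"c": 0, "d": 2, "e": 4, "f": 5, "g": 7, "a": 9, "b": 11}
ALTERATION = {"n": 0, "s": 1, "f": -1, "ss": 2, "x": 2, "ff": -2}


def sounding_pnum(pname, octave, accid=None, key_accid=None, trans_semi=0, octave_shift=0):
    """MIDI-style number of the sounding pitch; middle C (c, oct 4) = 60.

    accid: accidental valid for this note in the bar (overrides the key signature);
    key_accid: the key signature's accidental for this pitch name, if any;
    trans_semi: as in staffDef/@trans.semi for transposing instruments;
    octave_shift: whole octaves from an octave line, e.g. +1 under 8va.
    """
    if accid is not None:
        alteration = ALTERATION[accid]
    elif key_accid is not None:
        alteration = ALTERATION[key_accid]
    else:
        alteration = 0
    return 12 * (octave + 1) + PITCH_CLASS[pname] + alteration + trans_semi + 12 * octave_shift
```

For a B-flat clarinet (@trans.semi = -2), a written d in octave 5 gives sounding_pnum("d", 5, trans_semi=-2) == 72, i.e. the c an octave above middle C.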

Recore

In the last step of data preparation (for the time being), the verified data are transferred back into the Core model, i.e., substantial and accidental information are separated again (see Data Model). For this purpose, an XSLT (setupNewCore.xsl) creates a new Core file for a movement from the first completely verified source of that movement, and at the same time "depletes" the encoding of this source in accordance with the data model, so that only the accidental information is retained.

With another XSLT (merge2Core.xsl), the other sources are then aligned with this new Core file, and the differences are captured automatically in a two-step process. First, the XSLT outputs a list of the differences found, which must then be checked manually against the encodings and facsimiles. Only after any incorrect data have been cleared up is the XSLT run in productive mode. In this mode, <mei:app> elements with one <mei:rdg> per reading are created automatically, and the necessary references from the source files to this Core file are generated.

This step is extremely critical: on the one hand, possible encoding errors must be recognized as such; on the other, careless use can produce data that, owing to their scope and complexity, can only be corrected manually afterwards. The aims of this XSLT are extremely ambitious, since it evaluates the similarity of the sources in terms of content, i.e., different notations of the same content are recognized as such, for example wind parts notated as a chord in one source but as two voices in another. Despite their identical meaning, the encodings of such sources differ so markedly that an automatic comparison is anything but trivial. Once differences have been identified, they are recorded as locally as possible: rather than distinguishing complete bars, only the actually variant passages are marked. This automatically yields an encoding in the usual style of manual encoding. In addition, annotations are created automatically that describe the respective variance phenomenologically; these can serve as a starting point for explaining the phenomenon editorially (to be adopted or adapted manually).
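For a single aligned position, the productive mode can be reduced to grouping the sources by identical reading and emitting one <mei:rdg> per group. The Python sketch below does this for notes described by (pname, oct, dur) and reproduces the <app> example from the Core concept section. The alignment of positions across sources, including the chord vs. two-voices case, is assumed to have been done already and is the genuinely hard part; build_app is a hypothetical name, not merge2Core.xsl.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from typing import Optional


def build_app(readings: dict[str, tuple[str, int, int]]) -> Optional[ET.Element]:
    """Group sources with identical (pname, oct, dur) at one aligned position.

    Returns None when all sources agree (the Core keeps a single reading),
    otherwise an <app> with one <rdg> per distinct reading.
    """
    groups = defaultdict(list)
    for siglum, reading in readings.items():
        groups[reading].append(siglum)
    if len(groups) == 1:
        return None
    app = ET.Element("app")
    for (pname, octave, dur), sigla in groups.items():
        rdg = ET.SubElement(app, "rdg", source=" ".join("#" + s for s in sigla))
        ET.SubElement(rdg, "note", pname=pname, oct=str(octave), dur=str(dur))
    return app
```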

5. Guidelines

The work has shown that editorial guidelines, in the form of encoding guidelines, are a prerequisite for working effectively on such digital projects. The openness of the XML languages TEI and MEI, which allow different encodings of similar matters to meet the specific needs of individual projects, proves problematic, especially in collaborative projects, unless agreement on preferred solutions is reached from the outset. Otherwise, subsequent processing steps often have to take so many alternatives into account that the project's progress is hindered.

For this reason, the digital edition of the Weber Complete Edition (WeGA) has worked out Encoding Guidelines for the text parts of its TEI encoding; these are currently available as descriptive guidelines on the project's website and are to be incorporated into an ODD document. FreiDi largely adopted these encoding instructions for the text parts and supplemented them with project-specific features (see the Documentation for the Text), whereas the encoding principles for the music were developed only in the course of the work and had to be repeatedly modified or expanded to meet the requirements of the Core concept. Since the music encoding was in the hands of a small number of co-workers and was carried out in close consultation with the MEI community, documenting the guidelines was not necessary within the project itself. It would, however, make sense to develop such guidelines as soon as further editions are to be produced in this form; in the meantime, the FreiDi solutions can easily serve as orientation, since the FreiDi MEI files are published via GitHub.

6. Workflows (from Work Packages)

The starting point for developing the database in WP 1.1 was the raw music-notation data for the overture and numbers 1-16, which served as editing models during the project in collaboration with the Carl-Maria-von-Weber-Gesamtausgabe (WeGA). These data originated partly in Finale and partly in Sibelius and were converted via the intermediate format MusicXML into an MEI basis, which in later steps was to be enriched with MEI-specific features and adjusted to the contents of the sources used. These sources were, on the one hand, Weber's autograph, which was encoded completely, and on the other, for the selected numbers 6, 8, and 9, the identified authorized copies and the posthumous first edition of the score (for details see WP 1.3 and WP 3).

The most labor-intensive part of the project was associated with WP 1.3: the MEI encoding of the music texts based on the transformations from MusicXML. Concepts were developed and tested together with Raffaele Viglianti for the further enrichment and correction of the data in MEI. At first, the focus was on the autograph. In a second step – after an intermediate evaluation of the encoding work – a concept for encoding the written sources was developed and then the encoding of numbers 6, 8 und 9 was adjusted for the eight score copies and the first edition of the score of these numbers. The need to “proofread” these codes quickly showed the limits of a checking process based solely on the code – the time expenditure is extraordinarily high due to the code’s lack of transparency (despite the principle of “human readability”) and would not have been feasible within the project’s duration for all encodings. Moreover, the consciously offered alternatives of encoding the same facts without stringent editorial guidelines lead to different solutions further complicating the correction process. Early conclusions were therefore drawn and internal proofMEI-data-Tools developed based on partially visualizing the encoding, among them the above-mentioned pitch-control tool and the controlevent tool. WFurther test routines were carried out using specially developed XSLTs (see above), such as the addition of sound pitches (gestural) for transposing instruments. In addition, Schematron rules for verifying tone duration and meter were developed for carrying out a further correction process to ensure quality control.

In the initial phase, the data had to be converted into an intermediate format for rendering with abcjs or VexFlow before they could be displayed in the pitch tool. This meant that the MEI data had to be re-transformed or re-saved whenever revisions were made in the code window; establishing a clean workflow here also proved time-consuming, but this was offset by the easier processing of larger data volumes. Only during the last project phase did the Verovio library (Laurent Pugin) become available, making such a checking tool much more effective. A version of the MEI Score Editor (MEISE) currently in preparation applies the methods practiced in FreiDi in a modified form, so that a broadly usable pitch tool, well integrated into the workflow of producing digital editions, is likely to be available soon.
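The conversion code used in FreiDi is not documented here. To illustrate what such an intermediate step involves, the following Python sketch translates written MEI pitch and duration values (`@pname`, `@oct`, `@accid`, `@dur`, `@dots`) into ABC notation, the input format of abcjs. The function names are hypothetical; the sketch assumes a default note length of a quarter (`L:1/4`) and writes the tune in `K:C`, so alterations implied only by a key signature would not be shown unless they are also written out.

```python
from fractions import Fraction
from typing import Optional

# MEI @accid values mapped to ABC accidental prefixes
ACCID_TO_ABC = {"s": "^", "ss": "^^", "x": "^^", "f": "_", "ff": "__", "n": "="}


def mei_note_to_abc(pname: str, octave: int, accid: Optional[str] = None,
                    dur: int = 4, dots: int = 0, unit: int = 4) -> str:
    """Translate written MEI pitch/duration values into one ABC note token."""
    # ABC writes the octave starting at middle C (MEI oct="4") in capitals and the
    # next one in lower case; commas lower and apostrophes raise by further octaves.
    if octave >= 5:
        letter = pname.lower() + "'" * (octave - 5)
    else:
        letter = pname.upper() + "," * (4 - octave)
    # Length as a multiple of the default note length L:1/unit, including dots.
    length = Fraction(unit, dur)
    step = length
    for _ in range(dots):
        step /= 2
        length += step
    if length == 1:
        suffix = ""
    elif length.denominator == 1:
        suffix = str(length.numerator)
    elif length.numerator == 1:
        suffix = f"/{length.denominator}"
    else:
        suffix = f"{length.numerator}/{length.denominator}"
    return ACCID_TO_ABC.get(accid, "") + letter + suffix


def layer_to_abc(notes, meter="4/4", unit=4):
    """Wrap a list of note dicts (keyword arguments as above) in a minimal ABC tune."""
    body = " ".join(mei_note_to_abc(**n, unit=unit) for n in notes)
    return f"X:1\nM:{meter}\nL:1/{unit}\nK:C\n{body} |"


print(layer_to_abc([{"pname": "g", "octave": 3, "accid": "f", "dur": 8},
                    {"pname": "a", "octave": 4, "dots": 1},
                    {"pname": "e", "octave": 6, "accid": "s", "dur": 2}]))
```

Because every edit in MEI required re-running such a conversion, a renderer that reads MEI directly, as Verovio does, removes this step from the proofreading loop.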

Besides the encoding, another task was to make all sources relevant to the edition accessible as facsimiles down to the level of individual bars, by storing all position data in the MEI encoding.
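How the bar positions were captured in FreiDi is not described here. The following Python sketch only shows where such position data can live in MEI: a `<facsimile>` element holds a `<surface>` per page with a `<graphic>` and one `<zone>` (bounding box `@ulx`, `@uly`, `@lrx`, `@lry`) per bar, and each `<measure>` points to its zone via `@facs`. It assumes the bounding boxes already exist; the image file name, the id scheme and the function name are hypothetical.

```python
import xml.etree.ElementTree as ET

MEI_NS = "http://www.music-encoding.org/ns/mei"
XML_ID = "{http://www.w3.org/XML/1998/namespace}id"
ET.register_namespace("", MEI_NS)


def q(name):
    """Qualified MEI tag name as ElementTree expects it."""
    return f"{{{MEI_NS}}}{name}"


def link_measures_to_zones(music, image_url, boxes, surface_id="surface1"):
    """Add one <surface> with a <zone> per bar and point each <measure> at it via @facs.

    boxes maps measure @n to (ulx, uly, lrx, lry) pixel coordinates on the image.
    """
    measures = list(music.iter(q("measure")))  # collect before the tree is modified
    facsimile = ET.Element(q("facsimile"))
    music.insert(0, facsimile)  # <facsimile> precedes <body> inside <music>
    surface = ET.SubElement(facsimile, q("surface"), {XML_ID: surface_id})
    ET.SubElement(surface, q("graphic"), {"target": image_url})
    for measure in measures:
        n = measure.get("n")
        if n not in boxes:
            continue  # bar not located on this page
        zone_id = f"{surface_id}_m{n}"
        coords = dict(zip(("ulx", "uly", "lrx", "lry"), map(str, boxes[n])))
        ET.SubElement(surface, q("zone"), {XML_ID: zone_id, **coords})
        measure.set("facs", "#" + zone_id)
    return music


music = ET.fromstring(
    f'<music xmlns="{MEI_NS}"><body><mdiv><score><section>'
    '<measure n="1"/><measure n="2"/><measure n="3"/>'
    "</section></score></mdiv></body></music>")
link_measures_to_zones(music, "autograph_p001.jpg",
                       {"1": (120, 340, 610, 820), "2": (610, 340, 1105, 820)})
print(ET.tostring(music, encoding="unicode"))
```

Keeping the coordinates in zones rather than on the measures themselves lets several sources, each with its own facsimile, be linked from the same kind of measure structure.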

Connecting the Edirom tools to the TextGrid Repository had originally been planned in WP 1.4. Possible interface functions for implementation were coordinated with representatives of the TextGrid project (Würzburg and Göttingen). However, because the interface functions of the TextGrid Repository changed conceptually, it was only possible to connect the data to the repository with the first pre-release, 0.8.0. After a test phase, the consortium decided unanimously to separate the data backup in the TextGrid Repository from the actual data presentation (which is largely handled by Edirom), in order to give users faster and more reliable access when retrieving data.

WP 3.1 Development of a Model Digital Edition

In August 2013, the two staff members Johannes Kepper and Daniel Röwenstrunk presented, together with the MEI developer Perry Roland, a concept for dealing with musical variance at the Balisage Conference in Montréal. Out of this, a so-called “Core” data model was developed for both music and text editing; J. Kepper was responsible for the former, Raffaele Viglianti for the latter. Kepper has described this model in more detail in a post on this project page. The starting point was the problem that recording variance across sources in detail proved impractical given the large number of sources to be collated: even marginal differences quickly lead to a “branching” of the encoding in the sense of the parallel segmentation method, creating highly redundant data that makes reading and processing more difficult. The alternative of treating each source on its own and capturing it in a separate file has the advantage that a diplomatic encoding need only deal with the uncertainties of a single document. However, the redundancy problem increases, since all unchanging parts must be stored separately for each source, and the data can only be linked externally via a separate concordance. The Core concept attempts to combine the advantages of both methods: content that is identical across sources is placed in a central file, which refers to the separate encodings of the individual sources (capturing all their singular diplomatic aspects), thereby avoiding redundant duplication. At the same time, a concordance of the data is generated through largely automatable linking.
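The actual Core file is an MEI document that refers to the source encodings; its exact structure is described in Kepper’s post and is not reproduced here. The following Python sketch only illustrates the underlying idea under strong simplifications: each source is reduced to a dictionary of events keyed by a concordance position (here a hypothetical `(bar, event index)` tuple), events shared by all sources are stored once in the core, and only deviating positions keep per-source readings, roughly analogous to MEI `<app>`/`<rdg source="...">`. Sigla, event strings and function names are invented for illustration.

```python
from collections import defaultdict


def build_core(sources):
    """Split aligned source encodings into shared core events and variant readings.

    sources maps a siglum to {position: event}; positions come from a concordance.
    A position missing from a source counts as an omission (None).
    """
    positions = sorted(set().union(*sources.values()))
    core, apparatus = {}, {}
    for pos in positions:
        readings = defaultdict(list)  # event value -> sigla that attest it
        for siglum, events in sources.items():
            readings[events.get(pos)].append(siglum)
        if len(readings) == 1:
            core[pos] = next(iter(readings))  # identical everywhere: store once
        else:
            apparatus[pos] = dict(readings)   # deviation: keep each reading with its sources
    return core, apparatus


def reconstruct(siglum, core, apparatus):
    """Rebuild one source from the core plus the readings attested by that source."""
    events = dict(core)
    for pos, readings in apparatus.items():
        for value, sigla in readings.items():
            if siglum in sigla and value is not None:
                events[pos] = value
    return dict(sorted(events.items()))


sources = {
    "A":  {(1, 1): "g4 4", (1, 2): "a4 8", (1, 3): "b4 8"},
    "K1": {(1, 1): "g4 4", (1, 2): "a4 8", (1, 3): "b4 8"},
    "D":  {(1, 1): "g4 4", (1, 2): "a4 4"},
}
core, apparatus = build_core(sources)
print(core)
print(apparatus)
print(reconstruct("D", core, apparatus))
```

The `reconstruct` function shows that no information is lost: each individual source can be regenerated from the shared core and its own readings, while identical content is stored only once.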

Analysis of the encodings, especially of the music texts (but also of the textual aspects of the libretto), unexpectedly raised complex issues and requirements for the encoding model. This made it necessary to develop numerous technically supportive, semi-automatic workflows (based on XSLT, Schematron and RNG; see the description of the tools) in order to compare the sources in detail and evaluate their deviations on the basis of the generated bar concordance. Much of the development work on the model therefore had to be concentrated in this area, since the further concepts made no sense without first exploring these foundations.
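The way the FreiDi bar concordance was generated is not detailed here. As a minimal sketch, assuming that all sources number their bars consistently via `@n` (real sources may differ, for example through written-out repeats or added bars, which would need manual or heuristic mapping), a concordance can map each bar label to the `xml:id` of the corresponding `<measure>` in every source and report bars that a source lacks. Sigla, ids and function names are hypothetical.

```python
def bar_concordance(sources):
    """Map each bar label to the xml:id of the corresponding <measure> in every source.

    sources maps a siglum to a list of (measure @n, measure xml:id) pairs in document order.
    """
    concordance = {}
    for siglum, measures in sources.items():
        for n, xml_id in measures:
            concordance.setdefault(n, {})[siglum] = xml_id
    return concordance


def missing_bars(concordance, sigla):
    """List, per bar label, the sources in which no matching measure was found."""
    report = {}
    for n, row in concordance.items():
        absent = sorted(set(sigla) - set(row))
        if absent:
            report[n] = absent
    return report


sources = {
    "A": [("1", "A_m1"), ("2", "A_m2"), ("3", "A_m3")],
    "D": [("1", "D_m1"), ("3", "D_m3")],
}
conc = bar_concordance(sources)
print(conc["3"])
print(missing_bars(conc, sources))
```

Once such a table exists, a detailed comparison only ever has to look at the measures that one row of the concordance links together.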

WP 3.2 Development of the Digital Edition

In the course of the work, it became apparent that a clear distinction between the encoding work in WP 1.3 and the preparation of the edition in WP 3.2 is hardly possible, because interpretive editorial interventions are already necessary as part of the source documentation. This mainly concerns the secondary layer of the music text, which is encoded in MEI as so-called control events. For this reason, the proofreading tools had to be extended to include editorial functions, and the corresponding work, although partly of a purely documentary character, was moved to this WP, especially since, owing to the model developed, the resulting encodings already constitute the first stage of a digital edition. The use of <choice/> served as the criterion for delimiting WP 1.3 from WP 3.2: this element can capture equivocal source situations, for instance, in the case of control events, ambiguous slurs or problems in assigning dynamics.

Accordingly, the proofreading tool for control events was extended to cover editorial aspects, and numerous smaller XSLT-based auxiliary tools were developed, for example to determine the beat position of every note or other entry in the sources. The following further steps were implemented:

- For abbreviations such as colla-parte directives and bar repeats, a stand-off method of capture was conceived; the data of the developed model were then audited and readjusted, and on this basis all corresponding entries in the selected numbers and in the complete autograph were captured. This model for capturing abbreviations was also proposed to the MEI community.

- Also implemented was an alternative, automatic writing-out of the abbreviations that simultaneously documents the original findings and the references to the passage serving as the model for the written-out text.

- Based on the Schematron rules created in WP 1.3, obvious copying or engraving errors in the sources were edited. For example, <sic/> was used to mark rhythmic inconsistencies and <corr/> for the corresponding recommended correction, both of which are wrapped in a <choice/> element.

- In preparation for automatically comparing the encodings of the individual sources, a prototype XSLT was implemented to determine the actual sounding pitch of each note, taking into account transposition directives, the key signature, and other relevant provisions in the course of the movement.

- Furthermore, a semi-automatic comparison of the individual sources was developed using the central “Core file” mentioned above, in which all deviations in the primary parameters of the movements considered are documented as variants, with references to all compared sources in a kind of concordance down to the level of individual symbols. The goal was a complete documentation of the source deviations (initially regardless of their relevance from an editorial point of view).

- An alternative, simplified workflow was developed for the numbers captured only in the autograph; it provides less depth of encoding but accelerates data production dramatically, and is therefore considerably more sustainable for other projects.

- Finally, a new prototype for encoding and rendering annotations was to be conceived and implemented. Besides semi-automatic annotations generated from the Core file, annotations to numbers 6, 8 and 9 were also adapted from the WeGA to illustrate the different roles and significance of annotations. The important areas of linking annotations and of individual overlapping annotations, for which hardly any digital equivalents exist even in literary editing, had to be excluded for lack of time.
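The prototype XSLT for sounding pitches is not reproduced here. The following Python sketch models the same kind of reasoning for one bar of one staff, under stated assumptions: notes are given as dictionaries using the MEI attribute names `pname`, `oct` and `accid`; the key signature uses MEI `@key.sig` values such as `"1s"` or `"3f"`; transposition is given as MEI `@trans.diat`/`@trans.semi` (for a clarinet in B♭, `-1` and `-2`); and, as a simplification, an explicit accidental holds for the same letter and octave until the end of the bar, while ties across bar lines, clef changes and cautionary accidentals are ignored. The output uses the MEI gestural attributes `pname.ges`, `oct.ges` and `accid.ges`; all function names are hypothetical.

```python
STEPS = "cdefgab"
SEMITONES = {"c": 0, "d": 2, "e": 4, "f": 5, "g": 7, "a": 9, "b": 11}
ACCID_VALUES = {"s": 1, "f": -1, "n": 0, "ss": 2, "x": 2, "ff": -2}
ALTER_TO_ACCID = {1: "s", -1: "f", 0: "n", 2: "ss", -2: "ff"}


def key_sig_alterations(key_sig):
    """Alterations implied by an MEI-style key signature such as '2s', '3f' or '0'."""
    if key_sig in (None, "", "0"):
        return {}
    count, kind = int(key_sig[:-1]), key_sig[-1]
    order, value = ("fcgdaeb", 1) if kind == "s" else ("beadgcf", -1)
    return {pname: value for pname in order[:count]}


def written_alterations(notes, key_sig):
    """Effective written alteration of each note in one bar.

    An explicit accidental holds for the same letter and octave until the bar ends;
    otherwise the key signature applies (a simplifying assumption).
    """
    from_key = key_sig_alterations(key_sig)
    in_bar, result = {}, []
    for note in notes:
        key = (note["pname"], note["oct"])
        if note.get("accid") is not None:
            in_bar[key] = ACCID_VALUES[note["accid"]]
        result.append(in_bar.get(key, from_key.get(note["pname"], 0)))
    return result


def transpose(pname, octave, alter, trans_diat, trans_semi):
    """Apply a diatonic plus chromatic transposition to one spelled pitch."""
    step = STEPS.index(pname) + 7 * octave + trans_diat
    new_oct, index = divmod(step, 7)
    new_pname = STEPS[index]
    target = 12 * octave + SEMITONES[pname] + alter + trans_semi
    new_alter = target - (12 * new_oct + SEMITONES[new_pname])
    return new_pname, new_oct, new_alter


def sounding_pitches(notes, key_sig="0", trans_diat=0, trans_semi=0):
    """Gestural pitch attributes for every note of one bar of a (possibly transposing) part."""
    out = []
    for note, alter in zip(notes, written_alterations(notes, key_sig)):
        pname, octave, new_alter = transpose(note["pname"], note["oct"], alter,
                                             trans_diat, trans_semi)
        out.append({"pname.ges": pname, "oct.ges": octave,
                    "accid.ges": ALTER_TO_ACCID[new_alter]})
    return out


# Clarinet in B flat (sounds a major second lower), written key signature of one sharp.
bar = [{"pname": "f", "oct": 5},
       {"pname": "c", "oct": 5, "accid": "s"},
       {"pname": "c", "oct": 5},
       {"pname": "f", "oct": 5, "accid": "n"}]
for attrs in sounding_pitches(bar, key_sig="1s", trans_diat=-1, trans_semi=-2):
    print(attrs)
```

Separating the diatonic from the chromatic transposition keeps the spelling of the sounding pitch correct (a written C♯ on a B♭ clarinet becomes B, not C♭), which matters when sounding pitches from transposing and non-transposing parts are compared.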

The project partners are aware that, especially in realizing editorial concepts, the analysis of complex encoding problems meant that the original aim of providing satisfactory solutions could not be achieved. For the project’s purposes, however, the intensive examination of these basic encoding questions seemed more important, since further concepts could be developed from it. Against this background, it can be emphasized that the project has succeeded in furnishing practical proof that conventional collation methods (e.g., with the aid of CollateX) in principle also work with music encoding, so that source comparisons could in future be evaluated on the basis of automated collation (though this is still some way off).
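The following Python sketch illustrates the principle that text collation applies once music is serialized into tokens. It does not use CollateX: as a plainly named stand-in, it aligns two witnesses with `difflib.SequenceMatcher` from the Python standard library, whereas CollateX handles many witnesses and uses its own alignment algorithms. The token format (`pname`, octave, duration) and the function names are hypothetical.

```python
from difflib import SequenceMatcher


def tokenize(notes):
    """Serialize (pname, octave, dur) triples into comparable string tokens."""
    return [f"{pname}{octave}/{dur}" for pname, octave, dur in notes]


def collate(witness_a, witness_b):
    """Align two token sequences and return (operation, tokens in A, tokens in B) triples."""
    matcher = SequenceMatcher(a=witness_a, b=witness_b, autojunk=False)
    return [(op, witness_a[i1:i2], witness_b[j1:j2])
            for op, i1, i2, j1, j2 in matcher.get_opcodes()]


autograph = tokenize([("g", 4, 4), ("a", 4, 8), ("b", 4, 8), ("c", 5, 2)])
first_edition = tokenize([("g", 4, 4), ("a", 4, 4), ("c", 5, 2)])
for op, a, b in collate(autograph, first_edition):
    print(f"{op:8} {a} {b}")
```

The choice of tokens determines what counts as a variant: serializing only pitch and duration, as here, deliberately ignores differences in stem direction or beaming, which a fuller serialization would have to include.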

In the final phase of the project, a series of texts were written to introduce and explain the edition within Edirom Online, including an extensive chapter on the genesis and transmission of the music, with links to the corresponding WeGA documents, as well as a chapter on the work’s reception.

(Translation: Margit McCorkle ©2017)